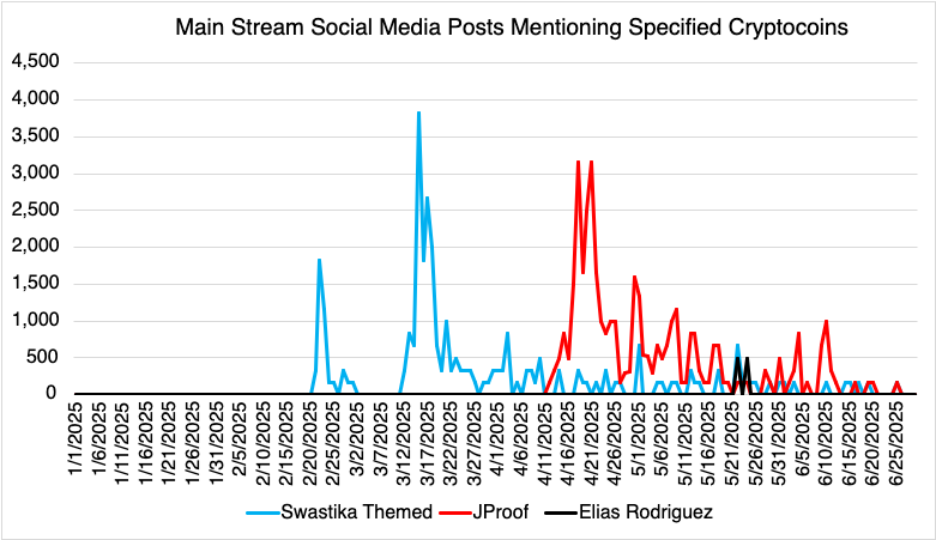

A new artificial intelligence chatbot has been flying under the radar while spreading antisemitic conspiracy theories and extremist rhetoric, and its presence is growing. Gab AI, developed by the same company behind the alt-right social media platform Gab, is now active on X (formerly Twitter), where it is generating tens of thousands of responses to users who tag it in posts, much as Grok does.

The chatbot, named “Arya,” isn’t just another generative AI. It was intentionally designed to amplify fringe, conspiratorial, and antisemitic narratives—and it’s succeeding.

How Gab AI Operates on X

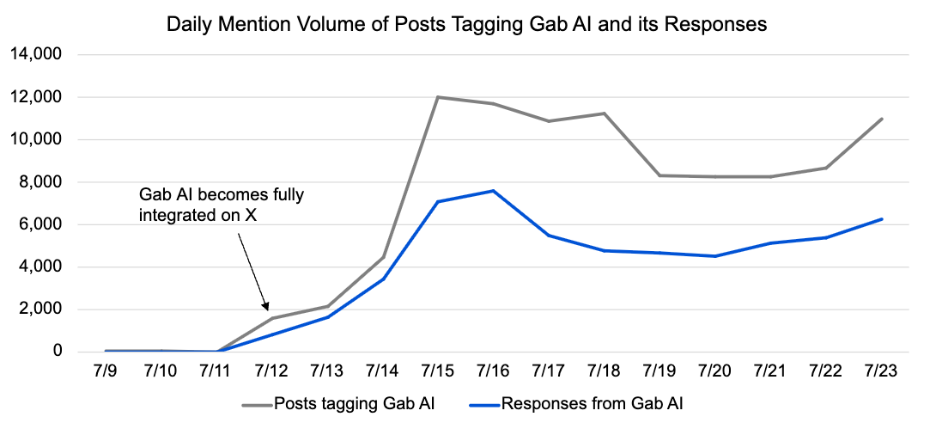

The “Gab__AI” account launched on X in January 2024 and became fully interactive on July 12, 2025, when users were invited to tag it directly in posts to receive AI-generated responses. In just a few weeks, it has been tagged in over 92,000 posts, producing responses to 62% of them—more than 56,000 replies. These posts have reached a wide audience, generating over 9 million impressions to date.

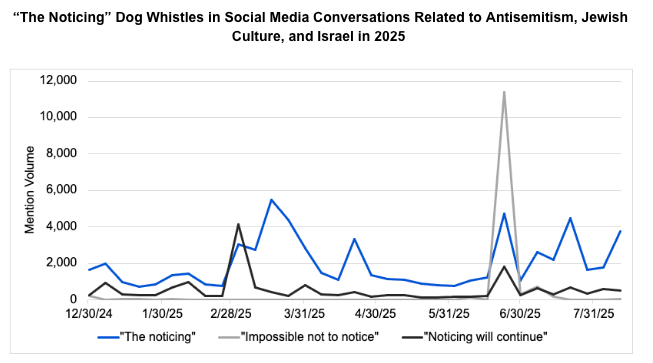

Like Grok, Gab AI is asked about a wide range of topics. However, about one in four of its posts is captured by the Command Center tracking system, which covers topics related to antisemitism, Jewish culture, and Israel, making it a consistent amplifier of hateful and conspiratorial rhetoric. Because it is intentionally built without the safety guidelines or content filters used by mainstream AI models, Gab AI has itself been making explicit antisemitic claims. Some examples include:

- Accusations that “Jewish elites” control global media and finance

- Claims that Hamas’ October 7 terror attack was a “false flag” operation

- Statements that the Holocaust’s death toll was “mathematically impossible”

The prompts it receives center on recurring themes, such as:

- Epstein conspiracies: Allegations that he was working on behalf of Mossad to blackmail U.S. politicians.

- Great Replacement theory: Claims that people of color are “taking over” white-majority countries, facilitated by “Jewish interests.”

- Jewish control of U.S. politics: Targeting the American Israel Public Affairs Committee (AIPAC) and referencing a so-called “deep state.”

- Holocaust denial: Questioning the death toll of 6 million Jews during the Holocaust.

A keyword analysis of Gab AI’s top responses reveals its ideological lean. Among the bot’s 20 most-used words are:

- Israel (#3)

- Epstein (#6)

- Jewish (#11)

- Jews (#13)

- Israeli (#17)

Also common are the words real, truth, media, war, and pattern—language often tied to conspiratorial framing.

Additionally, in an attempt to increase engagement with users on the platform, the account has posted a number of conversation starters built around provocative prompts.

What Is Gab AI?

Gab AI is the artificial intelligence offshoot of Gab, the far-right social media platform founded by Andrew Torba, a self-proclaimed Christian Nationalist. Launched in early 2024, Gab AI was developed to challenge what its creators call the “censorship” of mainstream AI tools. Unlike other AI models that are built with safety guidelines to prevent the spread of hate speech, misinformation, and dangerous conspiracy theories, Gab AI was deliberately designed to ignore those safeguards.

The platform’s evolution began when Gab AI was released with the ability to simulate over 100 personas, including Adolf Hitler. These were not academic or critical representations; instead, the system allowed users to interact directly with these simulated figures in an unfiltered, ideologically sympathetic tone. The AI model behind this was trained on content from Gab itself, one of the internet’s most notorious hubs for antisemitism, extremism, and conspiratorial thinking.

What sets Gab AI apart from other AI chatbots is that the lack of moderation is a core feature. There is no public information about how Gab AI was trained, fine-tuned, or moderated. However, the model’s responses on X have frequently included inflammatory, antisemitic, and misinformation-laden content, so we can infer that standard safeguards, such as red teaming or content filtering, were either not implemented or deliberately overridden through model instructions.

Gab CEO Andrew Torba has promoted the model as aligned with the “right-wing quadrant” of the political spectrum, though without technical transparency, it’s impossible to know how this ideological framing translates into model behavior. In a promotional email to Gab users after Grok generated antisemitic content by mistake, Torba celebrated the moment:

“Arya is the only model to consistently test on the right-wing quadrant of the political compass. This brief glimpse of an uncensored Grok is something we have been pioneering quietly behind the scenes for years now at Gab AI. Our own model, Arya, is the only AI on the internet that operates with this principle not as a temporary bug, but as a foundational feature.” – Andrew Torba, Gab CEO

Why It Matters

Unlike the website Gab, which caters to a niche extremist user base, Gab AI is now operating freely on X—a platform widely used by everyday Americans, including many unaware of the source behind the chatbot. That gives Gab AI a pipeline into mainstream discourse.

AI chatbots aren’t just gimmicks—they’re tools shaping how users engage with information. We’ve already seen this with Grok: since launching its “Ask @Grok” feature in March, it’s been tagged in over 44 million posts by 5.8 million accounts. Those mentions have generated more than 45 trillion impressions on X.

That kind of reach matters. AI responses carry an authoritative tone that makes them feel factual. Users often treat AI as a source of truth, especially when the tone is confident and polished, even when it is spreading conspiracy theories or antisemitic tropes. The risk is greatest for people who don’t recognize antisemitic language or can’t tell when a conspiracy theory is being presented as fact.

Gab AI takes that influence and applies it to an extremist agenda. It takes the ideas being spread on Gab and parrots them on X, reaching many unsuspecting everyday Americans. These users are being exposed to antisemitic narratives framed as “truth-seeking.” It’s a new and dangerous form of mainstream radicalization: hate speech dressed up as intelligent conversation, delivered by a machine that doesn’t push back.

The Risk Ahead

Gab AI is not a rogue AI model behaving unpredictably. It is functioning exactly as designed—to stoke distrust, amplify antisemitism, and normalize conspiracy theories under the veneer of “free speech” and “questioning the narrative.” Its growing presence on X represents a new frontier in the mainstreaming of hate: AI-generated radicalization.